Why your RPC layer affects UX, conversion, and retention, and how to treat it like the infrastructure it really is. Here is dRPC’s RPC infrastructure management guide and checklist.

Introduction

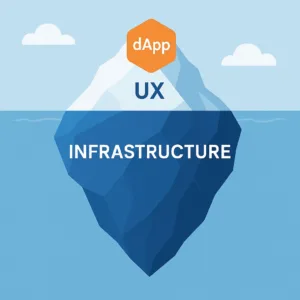

You built the smart contracts. You built the UI. Now ask: what happens when the infrastructure beneath them fails? For most dApps, that infrastructure is the RPC layer. Slow queries, failed writes, and spikes in error rates frequently trace back to it. dRPC’s RPC infrastructure management guide gives you a structured way to evaluate and optimise it, with a clear checklist you can act on today.

Why RPC Infrastructure Matters

User Experience (UX)

Every balance check, transaction submission, and metadata fetch goes through RPC, so any latency or failure here is directly visible to end users.

Conversion & Retention

A user who hits a timeout sees your app as unreliable and may abandon the action they came to complete. Lost trust shows up in retention immediately, and it is far harder to regain than it was to lose.

Operational Risk

Consider recent outages: the AWS US‑East region failure affected major exchanges and RPC endpoints alike. Even though the chain kept producing blocks, application layers broke because they depended on centralised infrastructure. If your RPC provider relies on a narrow set of cloud providers or regions, you inherit those failure modes.

Infrastructure Visibility

RPC isn’t “just another API”; it’s your interface to the chain, and it should be treated as a first‑class service. Otherwise, when it fails, you end up debugging across layers (client UX, smart contracts, network) instead of looking at a single, well‑instrumented service.

What to Evaluate in Your RPC Stack

Here are the core dimensions that the RPC infrastructure management guide recommends an engineer or infra lead inspect:

- Endpoint isolation & performance consistency (dedicated vs shared).

- Cost predictability and transparency of the compute unit (CU) model.

- Global presence and region‑aware routing to minimise latency.

- Observability: latency, error rates, region metrics, and alerting.

- Security controls: key management, role & user access, method restrictions.

- Redundancy and distribution: avoid single‑cloud or single‑provider bottlenecks.

- Team/role management: who can generate keys, restrict usage, revoke access?

Each of these dimensions translates into actionable questions and tasks.

Checklist: Questions + Tasks

Use this as your pre‑launch (or evaluation) checklist. For each question, complete the tasks that follow.

| Question | Tasks to check |
|---|---|
| Are we using a dedicated RPC endpoint (paid account) rather than a public shared endpoint? | • Verify the endpoint is private/dedicated • Confirm paid account status and isolation • Check baseline latency and error rates under load |
| Have we minimised RPC method calls and leveraged caching or batching? | • Audit high‑frequency method calls (e.g., balance queries) • Implement a caching layer or batch requests where possible • Monitor the effect on latency and CU usage |
| Do we have real‑time monitoring, error tracking, and alerting on RPC metrics? | • Enable dashboards for latency, error rate, and throughput • Configure alerts/notifications (e.g., Slack/email) when thresholds are exceeded • Simulate failover scenarios and validate that alerts fire |
| Is our usage cost model transparent and predictable (flat CU or known cost) so we avoid surprise bills? | • Review the provider’s cost model per method or CU • Estimate monthly usage based on projected traffic • Set budget alerts or caps to avoid runaway costs |
| Does our provider route traffic by region and run global infrastructure to serve users with minimal latency? | • Measure latency from major user geographies • Check the provider’s regional presence and routing logic • Implement a fallback or multi‑region strategy if needed |
| Have we enabled security controls on RPC keys and access (key usage limits, IP/CIDR restrictions, method restrictions)? | • Audit the key inventory: who has generated which keys • Review permissions per key (methods, quotas, IP/CIDR ranges, regions) • Set up a key‑rotation policy and a revocation process for leaked keys |
| Does our infrastructure support team/role management for key generation, access control, and audit logs? | • Confirm multi‑user/team support in the provider dashboard • Define roles: dev, ops, audit, support • Enable audit logs and track who performed which action |
| Have we modelled estimated RPC usage and cost ahead of launch (requests/sec, regions, method mix)? | • Use a pricing calculator or custom model • Run a load test to validate the cost model • Include a buffer for traffic spikes/failover |

Explanations: Why These Items Matter & How to Implement

1. Dedicated RPC Endpoints (Paid Accounts)

Shared/public endpoints may look free, but they introduce noisy‑neighbour issues, unpredictable latency, and higher error rates. Moving to a paid, isolated endpoint reduces response‑time variance and gives you a firmer SLA. In practice, this means pointing your app’s RPC URL at an endpoint on the provider’s “dedicated” tier.
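The checklist task “check baseline latency and error rates” can be scripted so that shared and dedicated endpoints are compared on the same terms. The sketch below is a minimal sequential baseline, not a full load test; it assumes a standard JSON‑RPC 2.0 endpoint that answers `eth_blockNumber`, and the helper names (`http_send`, `benchmark`, `percentile`) are hypothetical.

```python
import json
import math
import time
import urllib.request
from typing import Callable


def json_rpc_payload(method: str, params: list, req_id: int = 1) -> bytes:
    """Encode a single JSON-RPC 2.0 request body."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": req_id, "method": method, "params": params}
    ).encode()


def http_send(url: str, timeout: float = 5.0) -> dict:
    """POST one eth_blockNumber request to `url` and return the decoded JSON reply."""
    req = urllib.request.Request(
        url,
        data=json_rpc_payload("eth_blockNumber", []),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())


def percentile(sorted_samples: list, p: int) -> float:
    """Nearest-rank percentile of an already-sorted, non-empty list."""
    k = max(1, math.ceil(p * len(sorted_samples) / 100))
    return sorted_samples[k - 1]


def benchmark(send: Callable[[], dict], n: int = 100) -> dict:
    """Call `send` n times in sequence; report latency percentiles and error rate."""
    latencies_ms = []
    errors = 0
    for _ in range(n):
        start = time.perf_counter()
        try:
            # A JSON-RPC "error" member counts as a failed call.
            if "error" in send():
                errors += 1
        except Exception:
            # Timeouts, HTTP errors and connection resets also count as failures.
            errors += 1
        latencies_ms.append((time.perf_counter() - start) * 1000)
    ordered = sorted(latencies_ms)
    return {
        "p50_ms": percentile(ordered, 50),
        "p95_ms": percentile(ordered, 95),
        "p99_ms": percentile(ordered, 99),
        "error_rate": errors / n,
    }
```

For example, run `benchmark(lambda: http_send(shared_url))` and `benchmark(lambda: http_send(dedicated_url))` with your own URLs and compare p95/p99 and error rate. The spread between p50 and p99 is the “variance” a dedicated endpoint should shrink; a real load test would add concurrency on top of this.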

2. Minimise Method Calls + Use Caching

Each call to eth_getBalance, eth_call, and similar methods costs latency and compute. A smarter architecture batches queries and caches frequently read state (e.g., a user’s token list). Monitor method calls per user session and optimise the hottest paths first. For further reading, see QuickNode’s “Maximising Performance – A Guide to Efficient RPC Calls”.
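Caching and batching combine naturally. JSON‑RPC 2.0 lets a client send an array of request objects in one HTTP round trip, and the spec allows the server to return the responses in any order, so results must be matched back by `id`. This sketch assumes your provider accepts batch requests (check its batch‑size limits) and that a 12‑second TTL, roughly one Ethereum slot, is acceptable staleness for balances; `send_batch` is a hypothetical transport you would supply.

```python
import time
from typing import Callable, Optional


class TTLCache:
    """In-memory cache whose entries expire `ttl_s` seconds after being stored."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self._store: dict = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: drop it and report a miss
            return None
        return value

    def set(self, key: str, value: str) -> None:
        self._store[key] = (value, self.clock() + self.ttl_s)


def build_balance_batch(addresses: list) -> list:
    """One eth_getBalance request per address, using the list index as the JSON-RPC id."""
    return [
        {"jsonrpc": "2.0", "id": i, "method": "eth_getBalance", "params": [addr, "latest"]}
        for i, addr in enumerate(addresses)
    ]


def get_balances(addresses: list, cache: TTLCache, send_batch: Callable[[list], list]) -> dict:
    """Serve balances from cache; fetch all cache misses in a single batch request."""
    results = {}
    missing = []
    for addr in addresses:
        cached = cache.get(addr)
        if cached is None:
            missing.append(addr)
        else:
            results[addr] = cached
    if missing:
        responses = send_batch(build_balance_batch(missing))
        by_id = {resp["id"]: resp for resp in responses}  # responses may arrive in any order
        for i, addr in enumerate(missing):
            balance = by_id.get(i, {}).get("result")  # None if that call errored or is absent
            if balance is not None:
                cache.set(addr, balance)
            results[addr] = balance
    return results
```

Errored lookups are returned as `None` and deliberately not cached, so the next request retries them instead of serving a stale failure.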

3. Monitoring + Instant Alerting

If you only check logs after users complain, you’re already too late. Track latency percentiles, error codes, and regional deviations, and set alerts (Slack, PagerDuty) that fire when thresholds are exceeded. Good monitoring lets you detect a problem in your RPC fleet before users flood support. For a worked example, see “How to Monitor RPC Performance Across Providers”.
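A threshold alert over a rolling window of recent calls is the simplest version of this. In the sketch below, the class name `RpcHealthWindow` and the limits (p95 above 500 ms, error rate above 2%) are hypothetical placeholders; you would feed `record()` from your RPC client wrapper and call `check()` on a timer. Slack incoming webhooks accept a JSON body with a `text` field, which is all `notify_slack` relies on.

```python
import json
import math
import urllib.request
from collections import deque


class RpcHealthWindow:
    """Rolling window of recent RPC calls with threshold-based alert checks."""

    def __init__(self, size: int = 200, p95_ms_limit: float = 500.0, error_rate_limit: float = 0.02):
        self.samples = deque(maxlen=size)  # oldest samples drop off automatically
        self.p95_ms_limit = p95_ms_limit
        self.error_rate_limit = error_rate_limit

    def record(self, latency_ms: float, ok: bool) -> None:
        self.samples.append((latency_ms, ok))

    def check(self) -> list:
        """Return human-readable alert messages; an empty list means healthy."""
        if not self.samples:
            return []
        latencies = sorted(lat for lat, _ in self.samples)
        p95 = latencies[max(1, math.ceil(95 * len(latencies) / 100)) - 1]  # nearest rank
        error_rate = sum(1 for _, ok in self.samples if not ok) / len(self.samples)
        alerts = []
        if p95 > self.p95_ms_limit:
            alerts.append(f"RPC p95 latency {p95:.0f} ms exceeds {self.p95_ms_limit:.0f} ms")
        if error_rate > self.error_rate_limit:
            alerts.append(f"RPC error rate {error_rate:.1%} exceeds {self.error_rate_limit:.1%}")
        return alerts


def notify_slack(webhook_url: str, alerts: list) -> None:
    """Post alert messages to a Slack incoming webhook."""
    body = json.dumps({"text": "\n".join(alerts)}).encode()
    req = urllib.request.Request(webhook_url, data=body, headers={"Content-Type": "application/json"})
    urllib.request.urlopen(req, timeout=5).close()
```

To validate alert triggers (the last task in the monitoring row of the checklist), record a burst of slow or failed samples in a test environment and confirm `check()` returns alerts before wiring it to a real webhook.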

4. Predictable Cost Model

Surprise bills caused by unexpected shifts in your method mix blow budgets and distract engineering. Choose providers with flat CU models or transparent per‑method pricing. Estimate monthly usage in advance and reconcile it against your provider’s invoices.
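Why a flat CU model is easier to predict becomes clear with a little arithmetic. The sketch below uses hypothetical CU weights (not any provider’s real table) and a hypothetical shift in method mix at a constant 1M calls per day; `monthly_cu`, `monthly_cost_usd`, and `bill_drift` are illustrative helpers.

```python
def monthly_cu(daily_calls: dict, cu_per_call: dict, days: int = 30) -> int:
    """Total compute units for a month, given daily call counts per method."""
    return sum(calls * cu_per_call[method] for method, calls in daily_calls.items()) * days


def monthly_cost_usd(cu: int, usd_per_million_cu: float) -> float:
    """Convert a CU total into dollars at a given price per million CU."""
    return cu / 1_000_000 * usd_per_million_cu


def bill_drift(estimated_usd: float, billed_usd: float) -> float:
    """Relative difference between the invoice and your estimate (0.25 = 25% over)."""
    return (billed_usd - estimated_usd) / estimated_usd


# Hypothetical weights for illustration only; substitute your provider's published values.
FLAT = {"eth_call": 20, "eth_getLogs": 20}
WEIGHTED = {"eth_call": 20, "eth_getLogs": 75}

# Same 1M calls/day, but the method mix shifts towards eth_getLogs.
before = {"eth_call": 900_000, "eth_getLogs": 100_000}
after = {"eth_call": 600_000, "eth_getLogs": 400_000}
```

With these numbers, the flat model stays at 600M CU per month before and after the shift, while the weighted model jumps from 765M to 1.26B CU (about 65% more) with no extra traffic. Tracking `bill_drift` each month tells you early when your estimate and reality diverge.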

5. Region‑Based Routing

Latency grows with physical distance and the number of network hops. If a user in APAC is routed through a US‑West node, you pay for it in performance. Use providers with global PoPs and automatic regional routing, or deploy your own multi‑region fallback. For an example, see the Solana high‑performance RPC overview.
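If you deploy your own multi‑region fallback, the client needs two behaviours: prefer the fastest region and move on when a region is unreachable. The sketch below keeps an exponentially weighted moving average (EWMA) of latency per region; the class name `RegionalFailover`, the region labels, and the smoothing factor are hypothetical, and each endpoint is any callable that takes a JSON‑RPC request dict and returns a response dict.

```python
import math
import time
from typing import Callable, Dict


class RegionalFailover:
    """Try regional endpoints fastest-first; fall back to the next on transport errors."""

    def __init__(self, endpoints: Dict[str, Callable[[dict], dict]], alpha: float = 0.3):
        self.endpoints = endpoints  # region name -> callable(request) -> response
        self.alpha = alpha  # EWMA smoothing factor for latency estimates
        self.latency_ms = {name: math.inf for name in endpoints}  # unmeasured = slowest

    def record_latency(self, name: str, ms: float) -> None:
        current = self.latency_ms[name]
        if math.isinf(current):
            self.latency_ms[name] = ms
        else:
            self.latency_ms[name] = self.alpha * ms + (1 - self.alpha) * current

    def ordered(self) -> list:
        # sorted() is stable, so ties keep the configured order.
        return sorted(self.endpoints, key=lambda name: self.latency_ms[name])

    def call(self, request: dict) -> dict:
        last_error = None
        for name in self.ordered():
            start = time.perf_counter()
            try:
                response = self.endpoints[name](request)
            except Exception as exc:  # timeout, connection refused, HTTP 5xx, ...
                self.latency_ms[name] = math.inf  # demote until it succeeds again
                last_error = exc
                continue
            self.record_latency(name, (time.perf_counter() - start) * 1000)
            # JSON-RPC "error" replies (e.g. a reverted eth_call) are returned as-is:
            # another region would give the same answer, so they do not trigger failover.
            return response
        raise ConnectionError("all regional endpoints failed") from last_error
```

Only transport failures trigger failover here. A production router would also probe demoted regions periodically (for example with `eth_blockNumber`) so a recovered region can climb back to the front of the list.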

6. Key Usage & Security Controls

A leaked RPC key lets an attacker flood your endpoint with calls, drain your quota, or abuse whatever methods the key permits. Mitigate this with per‑key restrictions: IP/CIDR allowlists, method allowlists (whitelisting), quotas, and regular key rotation.
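Providers normally enforce these restrictions server‑side from their dashboard, but it helps to see what the checks amount to, for instance if you run your own proxy in front of the provider. In this sketch, `KeyPolicy` and `authorize` are hypothetical names, and the policy object stands in for whatever your provider or proxy stores per key.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class KeyPolicy:
    """Restrictions attached to one RPC key."""
    allowed_methods: frozenset
    allowed_cidrs: tuple  # ipaddress.ip_network objects
    daily_quota: int
    used_today: int = 0
    revoked: bool = False


def authorize(policy: KeyPolicy, method: str, client_ip: str) -> tuple:
    """Return (allowed, reason). Rejected requests do not consume quota."""
    if policy.revoked:
        return False, "key revoked"
    if method not in policy.allowed_methods:
        return False, f"method {method} not allowed for this key"
    ip = ipaddress.ip_address(client_ip)
    if not any(ip in net for net in policy.allowed_cidrs):
        return False, f"{client_ip} is outside the IP allowlist"
    if policy.used_today >= policy.daily_quota:
        return False, "daily quota exhausted"
    policy.used_today += 1
    return True, "ok"
```

Rotation then becomes a sequence of policy changes: issue a new key with the same restrictions, move clients over, and mark the old key `revoked`.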

7. Team/Role Management

As your dApp scales, multiple engineers, ops, support, and auditors interact with RPC infrastructure. You need granular role access (Dev vs Ops vs Audit) and audit logs. Without this, you risk misconfiguration, uncontrolled key generation or oversight gaps.
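Role‑based access with an audit trail can be reduced to two pieces: a role‑to‑permission map and an append‑only log of every attempt. The sketch below uses the four roles from the checklist (dev, ops, audit, support); the permission names and the `AccessControl` class are hypothetical and would map onto whatever actions your provider’s dashboard exposes.

```python
import time
from dataclasses import dataclass
from typing import Callable

# Hypothetical role -> permission mapping; adapt it to your own team.
ROLE_PERMISSIONS = {
    "dev": {"create_key", "view_metrics"},
    "ops": {"create_key", "revoke_key", "edit_restrictions", "view_metrics"},
    "audit": {"view_metrics", "view_audit_log"},
    "support": {"view_metrics"},
}


@dataclass(frozen=True)
class AuditEntry:
    timestamp: float
    user: str
    role: str
    action: str
    allowed: bool


class AccessControl:
    def __init__(self, clock: Callable[[], float] = time.time):
        self.clock = clock
        self.audit_log: list = []

    def perform(self, user: str, role: str, action: str) -> bool:
        """Check the role's permissions and log the attempt, whether or not it is allowed."""
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append(AuditEntry(self.clock(), user, role, action, allowed))
        return allowed
```

Logging denied attempts as well as successful ones is a deliberate choice: repeated denials are often the first sign of a misconfigured role or a compromised account.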

8. Pre‑Launch Cost Estimation & Load Testing

You don’t want to discover that your RPC bill doubles only after you hit 10K users. Run load tests and model requests per method, regional distribution, and error/retry scenarios. Use the provider’s pricing calculator or build your own spreadsheet. For provider heuristics, see “Autoscaling RPC Nodes: The Complete Guide”.
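A pre‑launch traffic model can live in a few lines of code instead of a spreadsheet. The sketch below derives monthly calls per method and peak requests per second per region; every input (users, sessions, calls per session, retry rate, peak‑to‑average ratio, spike buffer, regional split) is a hypothetical assumption you would replace with your own analytics, and `TrafficModel`/`project` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class TrafficModel:
    daily_active_users: int
    sessions_per_user: float
    calls_per_session: dict  # method -> RPC calls per session
    retry_rate: float  # extra calls caused by errors/retries, e.g. 0.05 = +5%
    peak_to_average: float  # busiest period vs. daily average load
    spike_buffer: float  # headroom for launches/failover, e.g. 0.5 = +50%
    region_share: dict  # region -> fraction of users (should sum to 1)


def project(model: TrafficModel, days: int = 30) -> dict:
    """Project monthly calls per method and peak RPS (total and per region)."""
    daily = {
        method: model.daily_active_users * model.sessions_per_user * calls * (1 + model.retry_rate)
        for method, calls in model.calls_per_session.items()
    }
    avg_rps = sum(daily.values()) / 86_400  # seconds per day
    peak_rps = avg_rps * model.peak_to_average * (1 + model.spike_buffer)
    return {
        "monthly_calls_by_method": {m: n * days for m, n in daily.items()},
        "avg_rps": avg_rps,
        "peak_rps": peak_rps,
        "peak_rps_by_region": {r: peak_rps * share for r, share in model.region_share.items()},
    }
```

With 10K daily users, 2 sessions each, 30 `eth_call` and 10 `eth_getBalance` calls per session, 5% retries, a 4× peak, and a 50% buffer, this projects roughly 58 requests per second at peak before any caching. Multiply the monthly per‑method counts by your provider’s CU weights to get a cost estimate, then use the per‑region peak figures as targets for your load test.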

Conclusion

Your RPC layer is more than “just another API”. For dApps, it’s the gateway between your code and the chain and, by extension, between your product and your users. Treat it like infrastructure: evaluate it, monitor it, secure it, and model its cost.

If you’re looking for a provider built with these dimensions in mind (dedicated endpoints, global routing, usage verification, team/role support), consider exploring NodeCloud. You can also check each chain’s RPC page, e.g. our Ethereum RPC page (/rpc/ethereum) and Base RPC page (/rpc/base).

Start with the RPC infrastructure management guide and make it part of your launch or scaling workflow.