Key Takeaways for Busy Readers

There is no universally “best” RPC infrastructure setup. The right choice depends on how your application sends requests and how your infrastructure is designed.

Managed SaaS RPC platforms optimise for speed of adoption and global frontend traffic, but introduce an external control-plane dependency.

Open source, self-hosted RPC stacks give teams control, extensibility, and sovereignty. When designed correctly, with multiple gateways, open source RPC infrastructure ensures that no single gateway is a point of failure for the whole application.

The most resilient architectures combine multiple providers and multiple gateways, ensuring applications stay online even during partial failures or provider-level incidents.

This guide helps teams evaluate whether managed SaaS or open source RPC infrastructure better matches their architecture and reliability requirements.

Introduction: Skipping the Basics

Every production dApp relies on RPC infrastructure, but this article intentionally skips introductory-level explanations.

If you need a refresher on what RPC nodes and RPC endpoints are, how JSON-RPC works, or how data flows between dApps and blockchains, start here:

This guide assumes you already understand the basics and focuses instead on architecture, failure modes, and real-world reliability tradeoffs that matter to CTOs, infrastructure teams, and DevOps leads evaluating open source RPC infrastructure versus managed SaaS models.

Throughout this guide, we compare managed SaaS models with open source RPC infrastructure to help teams make architecture-first decisions.

How dApps Actually Use RPC Infrastructure

In practice, dApp teams rely on RPC in one of two ways:

In-house infrastructure

Running and maintaining their own RPC nodes

Managing routing, failover, and observability internally

Third-party RPC services

Outsourcing node operations, routing, and scaling

Connecting via one or more public or private RPC endpoints

Most teams start with third-party services. The architectural question is what kind of third-party model you depend on.

Third-Party RPC Services: Centralised vs Distributed

Not all managed RPC services are architecturally equivalent.

Centralised RPC Services

Centralised RPC providers operate:

Their own node infrastructure

Their own gateways and routing logic

Their own DevOps and operational tooling

From the dApp’s perspective, this looks simple: a single endpoint, minimal setup, fast onboarding.

But architecturally, it means:

A single control plane

A single gateway layer

A single DevOps team

If something goes wrong at the provider level, such as an infrastructure failure, a routing bug, a misconfiguration, or an operational incident, your application can suffer a full outage even though the blockchain itself keeps producing blocks.

This is the classic external single point of failure.

Distributed RPC Services (NodeCloud Model)

Distributed RPC services take a fundamentally different approach.

Instead of relying on one infrastructure stack, they combine:

Their own gateways and routing layer

Dozens of independent node providers behind that layer

Diversity across infrastructure, client implementations, and DevOps teams

Requests are routed dynamically using health-aware logic across providers.

What this means in practice:

If one provider degrades, traffic is shifted elsewhere

If one client implementation misbehaves, others compensate

If part of the infrastructure fails, applications remain online
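The health-aware routing described above can be sketched in a few lines of TypeScript. This is a minimal illustration, assuming each provider is tracked with a recent error rate, p95 latency, and block lag; the `ProviderHealth` shape, the thresholds, and the scoring formula are hypothetical and are not how NodeCloud actually scores providers.

```typescript
// Hypothetical health snapshot for one upstream node provider.
interface ProviderHealth {
  name: string;
  errorRate: number;    // fraction of failed requests in a recent window, 0..1
  p95LatencyMs: number; // recent 95th-percentile latency in milliseconds
  blockLag: number;     // blocks behind the highest head seen across providers
}

// Illustrative thresholds only; real values depend on chain and workload.
const MAX_ERROR_RATE = 0.05;
const MAX_BLOCK_LAG = 3;

// A provider is eligible only if it is neither erroring nor lagging.
function isHealthy(p: ProviderHealth): boolean {
  return p.errorRate <= MAX_ERROR_RATE && p.blockLag <= MAX_BLOCK_LAG;
}

// Lower score is better: latency, penalised by the recent error rate.
function score(p: ProviderHealth): number {
  return p.p95LatencyMs * (1 + 10 * p.errorRate);
}

// Providers to try, best first. Unhealthy providers are dropped,
// so traffic shifts away from a degraded provider automatically.
function rankProviders(providers: ProviderHealth[]): ProviderHealth[] {
  return providers.filter(isHealthy).sort((a, b) => score(a) - score(b));
}

const snapshot: ProviderHealth[] = [
  { name: "provider-a", errorRate: 0.01, p95LatencyMs: 120, blockLag: 0 },
  { name: "provider-b", errorRate: 0.2, p95LatencyMs: 60, blockLag: 0 },  // degraded
  { name: "provider-c", errorRate: 0.0, p95LatencyMs: 150, blockLag: 1 },
  { name: "provider-d", errorRate: 0.0, p95LatencyMs: 40, blockLag: 12 }, // lagging
];

console.log(rankProviders(snapshot).map((p) => p.name));
```

Here the fastest providers are excluded because one is erroring and the other is lagging behind the chain head, which is the kind of decision a pure round-robin or latency-only router would get wrong.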

The single point of failure is reduced to the provider’s gateway and control plane, while the infrastructure behind it becomes significantly more resilient. This is the model used by dRPC’s NodeCloud.

This distinction is critical when comparing distributed SaaS platforms with open source RPC infrastructure, where control of the gateway layer can be fully decentralised.

Where the Single Point of Failure Really Lives

Understanding where the single point of failure lives is essential when evaluating open source RPC infrastructure versus managed SaaS platforms. This is where many explanations stop, and where nuance matters.

Managed SaaS RPC

Single point of failure sits outside your organisation

You depend on:

The provider’s gateway

The provider’s routing logic

The provider’s operational decisions

Even with distributed nodes behind the scenes, you do not control the gateway layer.

Open Source, Self-Hosted RPC (Naive Setup)

If you self-host an open source RPC stack with:

One gateway

One routing layer

You move the single point of failure into your own infrastructure.

This is better for sovereignty, but not yet optimal.

Open Source, Self-Hosted RPC (Correctly Designed)

Here is the critical nuance that is often missed.

In a correctly designed self-hosted setup, gateways themselves are no longer singular components.

With an open source RPC stack, you can design the control plane as a multi-gateway system, not a single chokepoint.

In practice, this means you can:

Run multiple independent gateways

Deploy them across different regions or environments

Mix gateway types, including:

Your own gateways

Centralised third-party gateways

Distributed third-party gateways

Route traffic across a shared routing layer toward:

Your own nodes

External RPC providers

Distributed networks like dRPC’s NodeCloud

If one gateway fails, degrades, or is taken offline, requests are transparently routed through another gateway.
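Gateway-level failover can be pictured as a caller that holds several gateway endpoints and moves to the next one when a call fails. The sketch below uses synchronous, in-memory stand-ins for gateways; the `Gateway` type, `sendWithFailover`, and the gateway names are hypothetical. A production setup would more often do this with a load balancer, DNS failover, or an async HTTP client with timeouts.

```typescript
// Minimal JSON-RPC 2.0 request/response shapes.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params: unknown[];
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
}

// Hypothetical gateway: a name plus a send function that throws
// when the gateway itself is unreachable.
interface Gateway {
  name: string;
  send: (req: JsonRpcRequest) => JsonRpcResponse;
}

// Try each gateway in order; the first one that answers wins.
// A JSON-RPC error object in a response is returned as-is; only thrown
// errors (transport-level failures) trigger failover to the next gateway.
function sendWithFailover(
  gateways: Gateway[],
  req: JsonRpcRequest,
): { servedBy: string; response: JsonRpcResponse } {
  const failures: string[] = [];
  for (const gw of gateways) {
    try {
      return { servedBy: gw.name, response: gw.send(req) };
    } catch (err) {
      failures.push(`${gw.name}: ${(err as Error).message}`);
    }
  }
  throw new Error(`all gateways failed: ${failures.join("; ")}`);
}

// Simulated topology: the team's own gateway is down, a third-party one is up.
const gateways: Gateway[] = [
  {
    name: "own-gateway-eu",
    send: () => {
      throw new Error("connection refused");
    },
  },
  {
    name: "third-party-gateway",
    send: (req) => ({ jsonrpc: "2.0", id: req.id, result: "0x10d4f" }),
  },
];

const { servedBy, response } = sendWithFailover(gateways, {
  jsonrpc: "2.0",
  id: 1,
  method: "eth_blockNumber",
  params: [],
});
console.log(servedBy, response.result);
```

Returning `servedBy` alongside the response is a small design choice that makes failovers visible in logs instead of silently masking a dead gateway.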

This does not remove failure entirely. Instead, it redefines the failure domain.

The single point of failure no longer lives inside a third-party provider’s control plane.

It becomes application-owned, distributed, and replaceable.

At that point:

No single provider controls availability

No single gateway can take your application offline

Censoring traffic, or taking it offline through a single outage or targeted attack, becomes significantly harder
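The effect of redundant gateways can be checked with a back-of-the-envelope calculation. It assumes each of n gateways is available with the same probability a and that gateways fail independently, which real deployments only approximate:

$$
A_n = 1 - (1 - a)^n
$$

$$
a = 0.99:\quad A_1 = 0.99,\qquad A_2 = 1 - (0.01)^2 = 0.9999
$$

Over a year (8,760 hours), that is about 87.6 hours of expected gateway unavailability with one gateway versus roughly 53 minutes with two. Gateways that share a region, routing layer, or deployment pipeline tend to fail together, which erodes this gain; that is why spreading gateways across different regions or environments matters.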

This is the architecture enabled by open source, self-hosted RPC stacks like dRPC’s NodeCore. It differs fundamentally from both managed SaaS RPC and naive self-hosted deployments, and it is why a properly designed open source RPC infrastructure can offer stronger availability guarantees than both centralised and distributed SaaS models.

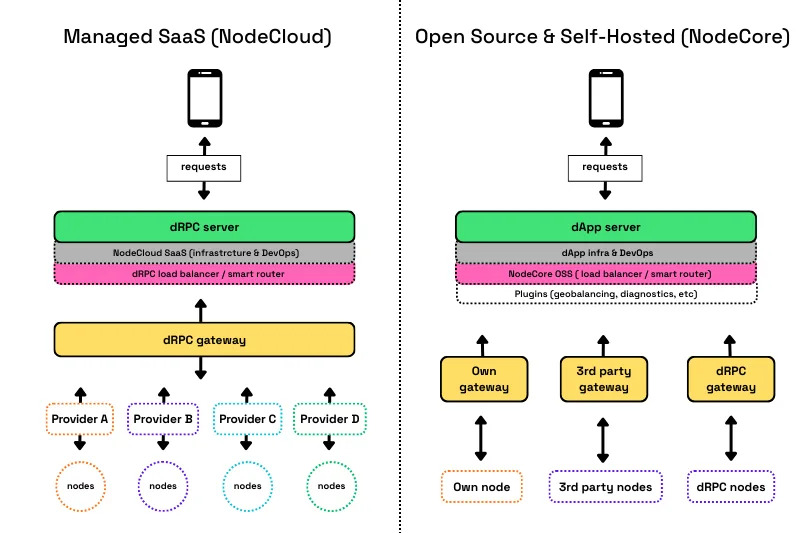

Visual Comparison: Distributed SaaS vs Decentralised Self-Hosted

This diagram illustrates:

Managed SaaS: one external gateway, distributed providers behind it

Self-hosted OSS: application and gateway live together, with multiple gateways routing across multiple providers

The key difference is not just where nodes live, but who controls the control plane.

When Managed SaaS RPC Is the Right Choice

Managed SaaS RPC infrastructure makes sense when:

A large portion of traffic originates from end-user browsers or wallets

Requests come from unpredictable, global locations

You want instant global coverage without managing infra

You accept an external control-plane dependency in exchange for simplicity

In these cases, running your own gateways close to users is impractical for most teams.

Distributed SaaS RPC is the right abstraction.

This is where dRPC’s NodeCloud excels.

When Open Source RPC Infrastructure Is the Right Choice

An open source RPC stack is the right choice when:

Most requests originate from backend services

Infrastructure runs in one or a few known regions

You require:

Custom routing logic

Fine-grained observability

Compliance or auditability

You want to eliminate external single points of failure

With multiple gateways and multiple providers, you gain fault tolerance and control that a managed SaaS platform, whose gateway you do not operate, cannot offer.

Combining Providers: The Most Robust Pattern

One of the most powerful aspects of open source RPC infrastructure is composability at both the gateway and provider layers.

With NodeCore, teams can:

Route traffic across:

Their own nodes

Existing third-party RPC providers

dRPC’s distributed node provider network

Apply deterministic routing rules

Route around partial failures automatically

This pattern mirrors the fallback approach found in client libraries such as ethers.js’s FallbackProvider and viem’s fallback transport, but implements it at the infrastructure layer rather than only in the client.
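Deterministic routing rules combined with automatic fallback can be sketched as an ordered rule table: each rule matches JSON-RPC methods and lists upstreams in priority order, and the router skips any upstream currently marked down. The rule format, the upstream names, and the `isUp` check are hypothetical and do not reflect NodeCore’s configuration syntax.

```typescript
// Hypothetical routing rule: which methods it matches, and the
// upstreams to try for those methods, in strict priority order.
interface RoutingRule {
  matches: (method: string) => boolean;
  upstreams: string[];
}

// Rules are evaluated top-down and the first match wins, so the same
// method always resolves to the same priority list (deterministic).
const rules: RoutingRule[] = [
  {
    // Trace/debug calls go only to nodes assumed to have tracing enabled.
    matches: (m) => m.startsWith("trace_") || m.startsWith("debug_"),
    upstreams: ["own-archive-node", "third-party-archive"],
  },
  {
    // Transaction submission prefers in-house nodes, then a distributed network.
    matches: (m) => m === "eth_sendRawTransaction",
    upstreams: ["own-full-node", "distributed-network"],
  },
  {
    // Catch-all: in-house node first, then external fallbacks.
    matches: () => true,
    upstreams: ["own-full-node", "third-party-rpc", "distributed-network"],
  },
];

// First upstream for this method that is currently up,
// or undefined if every candidate is down.
function route(method: string, isUp: (upstream: string) => boolean): string | undefined {
  const rule = rules.find((r) => r.matches(method));
  return rule?.upstreams.find(isUp);
}

// Simulate a partial failure: the in-house full node is down.
const down = new Set(["own-full-node"]);
const isUp = (u: string) => !down.has(u);

console.log(route("eth_call", isUp));               // third-party-rpc
console.log(route("eth_sendRawTransaction", isUp)); // distributed-network
console.log(route("trace_block", isUp));            // own-archive-node
```

Keeping the rules as data rather than scattered conditionals makes routing decisions reviewable, which also helps with the auditability requirements mentioned earlier.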

For reference:

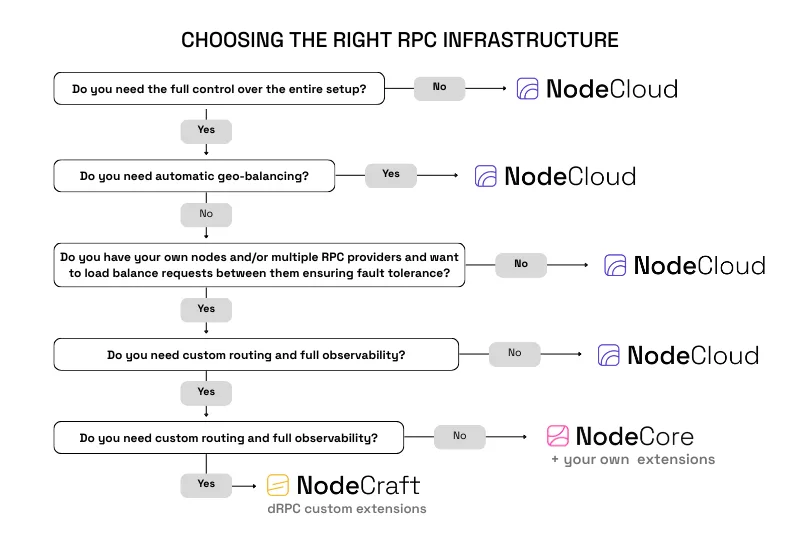

Decision Support: SaaS vs Open Source vs Hybrid

How to Read This Decision Matrix

This decision matrix is designed for CTOs, infrastructure leads, and DevOps teams who already understand RPC fundamentals and need to make a control-plane decision, not a tooling comparison.

The goal of this section is not to promote a specific product, but to help teams objectively assess whether managed SaaS or open source RPC infrastructure best matches their service architecture and reliability requirements.

It does not compare features.

It maps traffic origin, control requirements, and failure tolerance to the RPC model that best fits your architecture.

Follow it top-down.

1. Do You Need Full Control Over the Entire Setup?

This is the primary fork.

If you do not need to control routing logic, failover behavior, or gateway operations, a managed SaaS RPC model is already the correct abstraction.

In that case, dRPC’s NodeCloud is the right choice.

If you do need full control over how requests are routed, how failures are handled, and where dependencies live, you move toward a self-hosted model.

This decision has nothing to do with team size.

It is about ownership of the control plane.

2. Do You Need Automatic Geo-Balancing?

This question is really about where your traffic originates.

If a significant portion of your RPC requests come from:

browsers

wallets

globally distributed users

Then automatic geo-balancing close to end users is mandatory.

Operating that reliably yourself is impractical for most teams.

This is where managed, distributed SaaS RPC is the correct solution.

This is why frontend-heavy dApps, even very sophisticated ones, typically rely on managed, distributed RPC such as NodeCloud.

3. Do You Operate Your Own Nodes or Multiple RPC Providers?

This step identifies infrastructure maturity and intent.

If you already:

run your own nodes

pay for multiple RPC providers

want to actively distribute traffic between them

Then SaaS abstractions start to become limiting.

At this point, the problem is no longer “getting RPC access”. It is controlling how that access behaves under failure.

This is where dRPC’s NodeCore becomes relevant.

4. Do You Need Custom Routing and Full Observability?

This is the final fork.

If you want:

deterministic routing rules

method-level observability

full transparency into request paths

And you are comfortable building and maintaining extensions internally, NodeCore gives you the open-source foundation to do so.

If you need those same capabilities without building an internal infrastructure team, or you require advanced setups such as:

compliance-aware routing

custom auth systems

multi-region gateway deployments

advanced monitoring and alerting

Then NodeCraft builds these capabilities on top of NodeCore for you.

This is not about technical ability.

It is about where you want to spend engineering time.
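“Method-level observability” means recording metrics per JSON-RPC method rather than per endpoint. A minimal sketch, assuming an in-process wrapper around whatever function actually sends the request; the `MethodStats` shape and the `observe` and `report` helpers are hypothetical, and a real deployment would export these numbers to a metrics backend instead of keeping them in memory.

```typescript
// Per-method counters, kept in memory for illustration.
interface MethodStats {
  calls: number;
  errors: number;
  totalLatencyMs: number;
}

const stats = new Map<string, MethodStats>();

// Wrap a request function so every call is attributed to its method.
// `now` is injectable so the sketch can be tested with a fake clock.
function observe<T>(method: string, call: () => T, now: () => number = Date.now): T {
  const s = stats.get(method) ?? { calls: 0, errors: 0, totalLatencyMs: 0 };
  stats.set(method, s);
  const start = now();
  s.calls += 1;
  try {
    return call();
  } catch (err) {
    s.errors += 1; // count the failure, then let the caller handle it
    throw err;
  } finally {
    s.totalLatencyMs += now() - start;
  }
}

type MethodReport = { calls: number; errorRate: number; avgLatencyMs: number };

// Summarise error rate and average latency per method.
function report(): Record<string, MethodReport> {
  const out: Record<string, MethodReport> = {};
  stats.forEach((s, method) => {
    out[method] = {
      calls: s.calls,
      errorRate: s.errors / s.calls,
      avgLatencyMs: s.totalLatencyMs / s.calls,
    };
  });
  return out;
}

// Fake clock that advances 10 ms per reading, so each call "takes" 10 ms.
let t = 0;
const fakeNow = () => (t += 10);

observe("eth_call", () => "0x", fakeNow);
observe("eth_call", () => "0x", fakeNow);
try {
  observe("eth_getLogs", () => {
    throw new Error("range too large");
  }, fakeNow);
} catch {
  // already counted as an error by observe()
}
console.log(report());
```

Per-method breakdowns matter because an aggregate error rate can look healthy while one expensive method, such as log queries over large block ranges, fails consistently.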

What This Matrix Ultimately Shows

There is no universally “best” RPC infrastructure.

NodeCloud is optimal when traffic is global and frontend-driven.

NodeCore is optimal when traffic is backend-driven and control matters.

NodeCraft is for cases where NodeCore is the right model but custom production requirements exceed internal bandwidth.

The correct choice depends on:

where requests originate

who owns the gateway

how much failure you are willing to externalize

This matrix exists to make that decision explicit.
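For teams that want to record the outcome, the four questions can be written down as a small function. This is one reading of the matrix, not an official tool: the field names are hypothetical, and where the text does not say what follows (for example, needing full control while having no own nodes or additional providers to route between) the sketch returns "undetermined" rather than guessing. It also assumes that a team which reaches the final question but needs no custom routing stays with NodeCore, since that is where the third question leads.

```typescript
type Model = "NodeCloud" | "NodeCore" | "NodeCraft" | "undetermined";

// Hypothetical answers to the matrix questions, in order.
interface MatrixAnswers {
  needsFullControl: boolean;                   // Q1: own routing, failover, gateway ops?
  needsGeoBalancing: boolean;                  // Q2: much traffic from browsers/wallets worldwide?
  runsNodesOrMultipleProviders: boolean;       // Q3: own nodes or several paid providers?
  needsCustomRoutingAndObservability: boolean; // Q4: deterministic rules, method-level metrics?
  willBuildExtensionsInHouse: boolean;         // Q4: comfortable maintaining extensions?
  needsAdvancedSetup: boolean;                 // Q4: compliance routing, custom auth, multi-region, alerting
}

function recommend(a: MatrixAnswers): Model {
  // Q1: no need to own the control plane, so managed SaaS is enough.
  if (!a.needsFullControl) return "NodeCloud";
  // Q2: global, frontend-driven traffic needs geo-balancing close to users.
  if (a.needsGeoBalancing) return "NodeCloud";
  // Q3: the matrix only points to NodeCore once there is something to route between.
  if (!a.runsNodesOrMultipleProviders) return "undetermined";
  // Q4: custom capabilities, either built in-house or built for you.
  if (!a.needsCustomRoutingAndObservability) return "NodeCore";
  if (a.needsAdvancedSetup || !a.willBuildExtensionsInHouse) return "NodeCraft";
  return "NodeCore";
}

const backendTeam: MatrixAnswers = {
  needsFullControl: true,
  needsGeoBalancing: false,
  runsNodesOrMultipleProviders: true,
  needsCustomRoutingAndObservability: true,
  willBuildExtensionsInHouse: true,
  needsAdvancedSetup: false,
};

console.log(recommend(backendTeam));                              // NodeCore
console.log(recommend({ ...backendTeam, needsAdvancedSetup: true })); // NodeCraft
console.log(recommend({ ...backendTeam, needsGeoBalancing: true }));  // NodeCloud
```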

How dRPC’s NodeCloud and NodeCore Fit Together

This guide is not about picking sides.

It’s about understanding tradeoffs.

For a direct comparison of both models and how teams transition between them, see:

Many teams:

Start with NodeCloud for speed and global reach

Introduce NodeCore as infra becomes strategic

Combine both for maximum resilience

Takeaway

RPC infrastructure failures rarely happen because chains stop producing blocks.

They happen because control planes fail.

Managed SaaS moves that risk outside your organisation.

Open source, self-hosted stacks let you own and decentralise it.

When designed with multiple gateways and multiple providers, a self-hosted open source RPC infrastructure offers:

Higher resilience

Greater sovereignty

Better censorship resistance

Stronger guarantees that your application stays online

The right choice depends on how your dApp is built, not how big your team is.

For teams building production-grade systems, open source RPC infrastructure is increasingly becoming the default reliability standard.

FAQs

What is the difference between managed SaaS RPC and open source self-hosted RPC infrastructure?

Managed SaaS RPC platforms operate the gateway, routing logic, and node infrastructure on behalf of applications. Open source self-hosted RPC infrastructure places the gateway and control plane inside the application’s own infrastructure, enabling full control over routing, observability, and failure domains.

Where does the single point of failure exist in managed RPC platforms?

In managed SaaS RPC platforms, the single point of failure sits at the provider’s gateway and control plane. Even if the provider runs distributed nodes, applications remain dependent on that external gateway and its operational reliability.

Does self-hosting RPC infrastructure automatically remove single points of failure?

No. A naive self-hosted setup with a single gateway simply moves the single point of failure into the application’s own infrastructure. Removing the gateway as a single point of failure requires running multiple gateways and routing traffic between them.

How do multiple gateways improve RPC reliability and censorship resistance?

Multiple gateways allow traffic to be routed around gateway-level failures. If one gateway is degraded, blocked, or unavailable, requests can be served through another. This significantly improves uptime, resilience, and resistance to targeted outages or censorship.

When is managed SaaS RPC the right choice?

Managed SaaS RPC is the best choice when a large portion of traffic originates from globally distributed user devices such as browsers and wallets, when instant global coverage is required, and when teams prefer not to operate infrastructure internally.

When is an open source self-hosted RPC stack the better option?

An open source self-hosted RPC stack is better suited when most traffic originates from backend services, infrastructure runs in one or a few controlled regions, and teams require custom routing logic, deep observability, compliance guarantees, or full control over failure domains.

Can a self-hosted RPC stack use multiple providers at the same time?

Yes. A properly designed open source RPC stack can route traffic across in-house nodes, third-party RPC providers, and distributed networks simultaneously, automatically routing around partial failures.

Is team size a deciding factor when choosing RPC infrastructure?

No. The deciding factors are request origin, traffic distribution, and infrastructure topology. Team size is largely irrelevant compared to how and where RPC requests are generated.