Introduction

Latency is one of the most underestimated performance factors in blockchain applications. While developers often focus on gas fees, throughput, or block times, the speed at which applications communicate with blockchain nodes is just as critical. This communication happens through Remote Procedure Calls, commonly known as RPC.

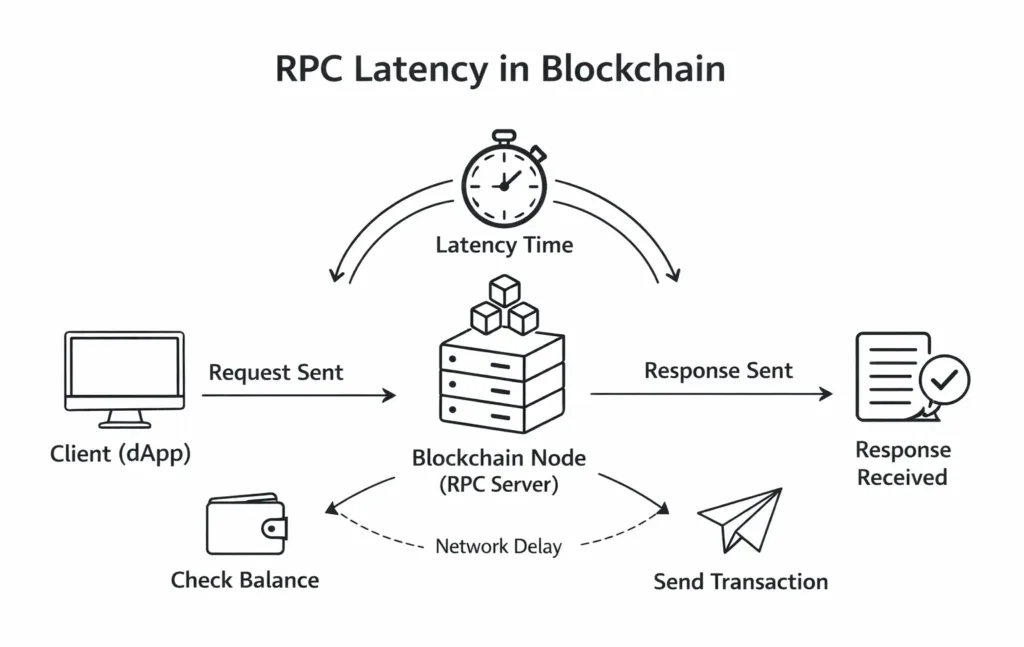

RPC latency refers to the delay between sending a request to a blockchain node and receiving a response. Every wallet balance check, transaction submission, smart contract read, or event query depends on this interaction. When latency is high, users experience slow loading interfaces, delayed confirmations, and unreliable application behavior.

This article explains what RPC latency is in a blockchain context, why it matters for modern dApps, how developers can measure it accurately, and what infrastructure choices help reduce it in production environments.

What Is RPC Latency in Blockchain

RPC latency is the amount of time it takes for a blockchain node to respond to a request sent by a client application. The request can be anything from querying an account balance to reading smart contract state or broadcasting a transaction.

In practical terms, RPC latency measures how fast your application can talk to the blockchain.

When a request is sent, several steps occur:

- The request travels from the client to an RPC endpoint

- The node processes the request and fetches data from its local state

- The response is serialized and sent back to the client

The total time taken across these steps is the RPC latency.

In real-world applications, this latency directly affects how responsive a dApp feels. A balance query that takes fifty milliseconds feels instant. A query that takes two seconds feels broken.

RPC latency is not the same as block time. Block time determines how fast blocks are produced. RPC latency determines how fast applications can read or write data to the chain.

Why RPC Latency Matters for dApps

User experience and responsiveness

For end users, RPC latency translates directly into perceived performance. Slow RPC responses cause wallets to hang, dashboards to load slowly, and transactions to appear stuck.

A decentralized application can have perfect frontend design, but if RPC responses take seconds to return, users will assume the application is unreliable.

Transaction reliability

High latency can cause request timeouts, duplicate submissions when clients retry, and missed or delayed confirmations. In trading or NFT minting scenarios, this can result in lost opportunities or failed transactions.

In DeFi environments, even small delays can impact execution price, arbitrage opportunities, or liquidation timing.
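Capping how long a client waits keeps a slow endpoint from hanging the interface. The sketch below wraps a JSON-RPC call in a client-side timeout. It assumes Node.js 18+ for the global `fetch` and `AbortSignal.timeout`. The name `rpcCall`, its options, and the 3-second default are illustrative choices, not values from this article.

```javascript
// Minimal JSON-RPC helper with a client-side timeout (Node.js 18+).
// `rpcCall`, `timeoutMs` and `fetchImpl` are hypothetical names for illustration.
async function rpcCall(url, method, params = [], { timeoutMs = 3000, fetchImpl = fetch } = {}) {
  const response = await fetchImpl(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ jsonrpc: "2.0", id: 1, method, params }),
    // Abort the request if no response arrives within timeoutMs
    signal: AbortSignal.timeout(timeoutMs)
  })
  if (!response.ok) {
    throw new Error(`HTTP ${response.status} from RPC endpoint`)
  }
  const payload = await response.json()
  if (payload.error) {
    throw new Error(`RPC error ${payload.error.code}: ${payload.error.message}`)
  }
  return payload.result
}
```

A timeout alone does not make retries safe. If an `eth_sendRawTransaction` call times out, the transaction may still have reached the node. A retry should therefore resend the same signed transaction rather than sign a new one. The identical payload carries the same nonce and hash, so at most one copy can be included.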

Developer productivity

From a development perspective, slow RPC responses make debugging harder and testing more frustrating. Developers depend on fast feedback loops when iterating on smart contracts or backend logic.

Infrastructure stability

Latency spikes often indicate overloaded or poorly optimized infrastructure. Monitoring RPC latency provides early signals of scaling issues before full outages occur.

Common Causes of High RPC Latency

RPC latency is influenced by multiple factors, often outside the control of the application itself.

Geographic distance

The physical distance between the client and the node affects network round-trip time. Requests sent across continents will naturally take longer than regional traffic.
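Distance puts a hard floor on latency before any node does work. As a rough rule of thumb, light in optical fiber travels at about two thirds of its speed in vacuum, roughly 200 km per millisecond. Every 1,000 km between client and node therefore adds at least 10 ms of round-trip time. The helper below only encodes that arithmetic. Real network routes are longer than straight-line distance, so measured numbers will be higher.

```javascript
// Approximate propagation speed of light in optical fiber: ~200 km per ms
// (about two thirds of c). A rough physical constant, not a measurement.
const FIBER_KM_PER_MS = 200

// Lower bound on network round-trip time for a given one-way distance.
// Ignores routing detours, queuing, TCP/TLS handshakes and node processing time.
function minRoundTripMs(distanceKm) {
  return (2 * distanceKm) / FIBER_KM_PER_MS
}

console.log(minRoundTripMs(1000)) // 10
console.log(minRoundTripMs(6000)) // 60
```

A client about 6,000 km from its node spends at least 60 ms per request on propagation alone. Opening a new HTTPS connection also costs extra round trips for the TCP and TLS handshakes.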

Shared public endpoints

Public RPC endpoints are shared by thousands of users. During traffic spikes, the nodes behind them can become overloaded, which increases response times or causes requests to be dropped.

Node performance and indexing

Nodes that are not fully synced, poorly indexed, or running on underpowered hardware respond more slowly to queries.

Network congestion

Heavy on-chain activity during peak periods or major events raises the load on nodes, which can slow down request handling.

Inefficient request patterns

Excessive polling, large payloads, or unoptimized calls increase latency and strain both client and node resources.
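JSON-RPC 2.0 batching is one way to reduce the number of round trips. A batch sends several calls as an array in a single HTTP request. The sketch below assumes the endpoint accepts batch requests. Batch support and maximum batch size vary by provider, so check your provider's limits. `buildBatch`, `matchResponses` and `sendBatch` are hypothetical helper names.

```javascript
// Turn [[method, params], ...] into a JSON-RPC 2.0 batch with unique ids.
function buildBatch(calls) {
  return calls.map(([method, params], index) => ({
    jsonrpc: "2.0",
    id: index + 1,
    method,
    params
  }))
}

// The JSON-RPC 2.0 spec allows batch responses in any order,
// so match each response back to its request by id.
function matchResponses(batch, responses) {
  const byId = new Map(responses.map((response) => [response.id, response]))
  return batch.map((request) => byId.get(request.id))
}

// Send all calls in one HTTP round trip (Node.js 18+ global fetch).
async function sendBatch(url, calls) {
  const batch = buildBatch(calls)
  const response = await fetch(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(batch)
  })
  if (!response.ok) {
    throw new Error(`HTTP ${response.status} from RPC endpoint`)
  }
  const payload = await response.json()
  // A rejected batch comes back as a single error object, not an array
  if (!Array.isArray(payload)) {
    throw new Error("Endpoint did not return a batch response")
  }
  return matchResponses(batch, payload)
}
```

For example, `sendBatch(url, [["eth_blockNumber", []], ["eth_chainId", []]])` fetches both values in one round trip instead of two.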

How to Measure RPC Latency

Measuring RPC latency accurately requires timing requests from the client side and observing response delays over time.

Basic latency measurement using curl

A simple way to measure RPC latency is to time a JSON-RPC request using curl.

```shell
curl -X POST https://your.rpc.endpoint \
  -H "Content-Type: application/json" \
  -d '{"jsonrpc":"2.0","method":"eth_blockNumber","params":[],"id":1}' \
  -w "\nTotal time: %{time_total}s\n"
```

This command reports the total request time, which includes DNS lookup, TCP and TLS connection setup, network transfer, and server processing.

Measuring latency in Node.js

Developers often measure latency programmatically to track performance in real applications.

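```javascript
// Requires Node.js 18+, which provides a global fetch (no node-fetch needed)
const RPC_URL = "https://your.rpc.endpoint"

async function measureLatency() {
  const start = performance.now()

  const response = await fetch(RPC_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({
      jsonrpc: "2.0",
      method: "eth_blockNumber",
      params: [],
      id: 1
    })
  })

  // Read the full body so the timing covers the complete response,
  // not just the arrival of the response headers
  const payload = await response.json()
  const duration = performance.now() - start

  if (!response.ok || payload.error) {
    throw new Error(`RPC request failed with HTTP ${response.status}`)
  }

  console.log(`RPC latency: ${duration.toFixed(1)} ms (block ${parseInt(payload.result, 16)})`)
}

measureLatency().catch(console.error)
```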

This approach allows teams to log latency continuously and correlate it with user experience metrics.
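A single request is a noisy measurement. One common refinement is to time many sequential calls and discard the first few, since an initial request may pay for DNS lookup and TCP/TLS connection setup that later requests on a reused connection skip. The sketch below shows this approach; it is one option, not a prescribed method. `sampleLatency` is a hypothetical helper that accepts any async function, such as a wrapper around the `eth_blockNumber` request above.

```javascript
// Time `count` sequential calls of an async function, in milliseconds.
// The first `warmup` calls run but are not recorded, because they may include
// one-off costs such as DNS lookup and TCP/TLS connection setup.
async function sampleLatency(call, count, warmup = 1) {
  const samples = []
  for (let i = 0; i < warmup + count; i++) {
    const start = performance.now()
    await call()
    const elapsed = performance.now() - start
    if (i >= warmup) {
      samples.push(elapsed)
    }
  }
  return samples
}
```

For example, `await sampleLatency(sendOneRequest, 50)` returns 50 durations that can be logged or summarized. Here `sendOneRequest` stands in for any function that performs one RPC call.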

Continuous monitoring and dashboards

For production systems, latency should be monitored continuously. Many teams feed RPC metrics into observability stacks built on tools such as Prometheus and Grafana, or use plug-and-play diagnostics such as dRPC’s NodeHaus dashboard.

Dedicated RPC providers often expose latency metrics and request breakdowns directly in dashboards.
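The sketch below makes the Prometheus option concrete. It renders latency samples as a histogram in Prometheus's text exposition format, which a scrape endpoint could serve and Grafana could chart. The metric name `rpc_latency_seconds` and the bucket boundaries are assumptions chosen for illustration. In a real service, a client library such as `prom-client` would normally maintain the histogram for you.

```javascript
// Render latency samples (in seconds) as a Prometheus histogram in the
// text exposition format. Buckets must be sorted ascending and are cumulative:
// each `le` line counts every sample less than or equal to that upper bound.
function renderHistogram(name, samples, buckets) {
  const lines = [
    `# HELP ${name} RPC request latency in seconds.`,
    `# TYPE ${name} histogram`
  ]
  for (const bound of buckets) {
    const count = samples.filter((s) => s <= bound).length
    lines.push(`${name}_bucket{le="${bound}"} ${count}`)
  }
  lines.push(`${name}_bucket{le="+Inf"} ${samples.length}`)
  lines.push(`${name}_sum ${samples.reduce((total, s) => total + s, 0)}`)
  lines.push(`${name}_count ${samples.length}`)
  return lines.join("\n") + "\n"
}

// Hypothetical metric name and buckets (100 ms, 300 ms, 600 ms, 1 s)
console.log(renderHistogram("rpc_latency_seconds", [0.05, 0.2, 0.7], [0.1, 0.3, 0.6, 1]))
```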

What Is a Good RPC Latency Benchmark

There is no single ideal number, but practical benchmarks exist.

- Local or same-region RPC connections typically respond in under one hundred milliseconds.

- Regional connections usually fall between one hundred and three hundred milliseconds.

- Global connections across continents often range between three hundred and six hundred milliseconds.

Consistency matters more than raw speed. A stable two hundred millisecond response is better than a fifty millisecond response that occasionally spikes to several seconds.
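Percentiles show consistency more clearly than averages do. The sketch below summarizes latency samples using the nearest-rank percentile method, which is one of several common definitions. The sample data is made up. It shows how a mostly fast endpoint with one slow outlier can have almost the same mean as a steady 200 ms endpoint while its p99 is far worse.

```javascript
// Nearest-rank percentile: the smallest sample such that at least p% of
// all samples are less than or equal to it.
function percentile(samples, p) {
  const sorted = [...samples].sort((a, b) => a - b)
  const rank = Math.ceil((p / 100) * sorted.length)
  return sorted[Math.max(rank, 1) - 1]
}

function summarize(samples) {
  const mean = samples.reduce((total, s) => total + s, 0) / samples.length
  return {
    mean,
    p50: percentile(samples, 50),
    p95: percentile(samples, 95),
    p99: percentile(samples, 99)
  }
}

// Illustrative data only: 19 fast responses plus one 3-second spike,
// compared with an endpoint that always answers in 200 ms.
const spiky = [...Array(19).fill(50), 3000]
const steady = Array(20).fill(200)

console.log(summarize(spiky))  // { mean: 197.5, p50: 50, p95: 50, p99: 3000 }
console.log(summarize(steady)) // { mean: 200, p50: 200, p95: 200, p99: 200 }
```

Tracking p95 and p99 alongside the median surfaces the occasional multi-second spikes that an average hides.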

RPC Latency vs Throughput and Block Time

It is important not to confuse RPC latency with other blockchain performance metrics.

Block time defines how often new blocks are produced. It does not guarantee fast data access.

Throughput defines how many transactions the network can process per second. It does not determine how quickly applications can query state.

RPC latency specifically measures read and write access speed between applications and nodes.

All three metrics must be considered together when designing scalable Web3 systems.

How dRPC Minimizes RPC Latency

dRPC operates globally distributed RPC infrastructure designed to minimize latency for developers and applications.

Key architectural principles include:

- Multi-region endpoint deployment to reduce geographic distance

- Intelligent routing that directs requests to the fastest available node

- Decentralized provider networks that avoid congestion at any single point

- Load balancing that prevents traffic spikes from degrading performance

This approach is designed to keep response times consistent even during high-demand events.
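dRPC's routing runs inside its own infrastructure, and this article does not describe its algorithm. The sketch below is a purely client-side illustration of the general idea behind latency-aware routing. It keeps an exponentially weighted moving average (EWMA) of observed latency per endpoint and sends the next request to the endpoint with the lowest score. The `LatencyRouter` class, the smoothing factor `alpha`, and the endpoint URLs are all hypothetical.

```javascript
// Client-side latency-aware endpoint selection using an EWMA per endpoint.
// A hypothetical illustration only; not dRPC's routing implementation.
class LatencyRouter {
  constructor(endpoints, alpha = 0.2) {
    this.alpha = alpha // weight of the newest sample (0 < alpha <= 1)
    this.scores = new Map(endpoints.map((url) => [url, null]))
  }

  // Fold a new latency observation (ms) into the endpoint's moving average.
  record(url, latencyMs) {
    const previous = this.scores.get(url)
    const next = previous == null
      ? latencyMs
      : this.alpha * latencyMs + (1 - this.alpha) * previous
    this.scores.set(url, next)
  }

  // Return an unmeasured endpoint first so every endpoint gets a score,
  // otherwise the endpoint with the lowest smoothed latency.
  pick() {
    let best = null
    let bestScore = Infinity
    for (const [url, score] of this.scores) {
      if (score === null) return url
      if (score < bestScore) {
        best = url
        bestScore = score
      }
    }
    return best
  }
}

const router = new LatencyRouter(["https://rpc-a.example", "https://rpc-b.example"])
router.record("https://rpc-a.example", 120)
router.record("https://rpc-b.example", 80)
console.log(router.pick()) // https://rpc-b.example
```

A smaller `alpha` reacts more slowly to a single spike, while a larger one tracks recent conditions more closely. A production router would also need health checks and handling for failed requests, which this sketch omits.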

Developers looking to understand the broader role of RPC infrastructure can also read the guide “What Are RPC Nodes and Endpoints? A Complete Guide” on the dRPC blog.

Best Practices to Reduce RPC Latency in Your dApps

- Choose RPC endpoints closest to your users

- Avoid excessive polling and prefer event-driven updates

- Cache frequently accessed data where possible

- Monitor latency continuously rather than reacting to outages

- Use dedicated or decentralized RPC providers instead of shared public endpoints
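One minimal way to apply the caching recommendation is a short-lived in-memory cache in front of read-only calls. This version is only one possible approach, not a recommended library. It also shares in-flight requests, so several components asking for the same value at once trigger a single RPC call. `createTtlCache` is a hypothetical helper. The TTL should reflect how often the data can change: no longer than the chain's block time for state such as balances, and much longer for values like the chain ID.

```javascript
// Tiny TTL cache for read-only RPC results. Concurrent callers with the same
// key share one in-flight promise, so only one network request is made.
// `now` is injectable to make the cache easy to test.
function createTtlCache(ttlMs, now = () => Date.now()) {
  const entries = new Map()

  return async function cached(key, load) {
    const hit = entries.get(key)
    if (hit && now() - hit.storedAt < ttlMs) {
      return hit.value
    }
    const value = load()
    entries.set(key, { storedAt: now(), value })
    try {
      return await value
    } catch (error) {
      // Do not cache failures; let the next caller retry.
      if (entries.get(key)?.value === value) entries.delete(key)
      throw error
    }
  }
}
```

A call looks like `cached("eth_chainId", () => fetchChainId())`, where `fetchChainId` stands in for whatever function performs the request.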

Teams building multi-chain applications may also benefit from reviewing “Building dApps on Multiple EVM Chains with RPC Infrastructure”, which explains how infrastructure design affects performance across networks.

Take-Away

RPC latency is a foundational performance metric in blockchain applications. It determines how responsive, reliable, and scalable a dApp feels to users and developers alike.

Understanding what RPC latency is, how to measure it, and what causes it helps teams make better infrastructure decisions. Measuring latency early and monitoring it continuously prevents performance issues from becoming user-facing failures.

By using globally distributed, decentralized RPC infrastructure such as dRPC, teams can significantly reduce latency, improve reliability, and deliver faster Web3 experiences at scale.

FAQs

What is RPC latency in blockchain?

RPC latency is the delay between sending a request to a blockchain node and receiving a response. It directly impacts application speed and reliability.

What causes high RPC latency?

Common causes include geographic distance, overloaded public endpoints, poor node performance, and inefficient request patterns.

How can developers measure RPC latency?

Developers can measure latency using tools like curl, application-level timers, or monitoring dashboards integrated into production systems.

What is a good RPC latency benchmark?

Under one hundred milliseconds is ideal for local access. Under three hundred milliseconds is acceptable for regional access. Consistency matters more than peak speed.

How does dRPC reduce blockchain RPC latency?

dRPC uses globally distributed, load balanced, decentralized RPC infrastructure to ensure fast and consistent responses across regions.